Startup’s processor leverages artificial intelligence to solve application-specific problems of voice and sound.

What’s at stake?

“Edge AI” is the latest trend to sweep the Internet of Things (IoT), embedded, and AI markets, lending urgency to the question of how one edge-AI approach differs from another. A startup called AONDevices is taking a pragmatic path with an ASIC that excels in low power consumption, processing power, and recognition accuracy.

Talking AI isn’t enough. “Artificial intelligence on the edge” is deemed crucial to reducing latency and bandwidth, improving reliability, and strengthening data security and privacy, all while cutting power drain in edge computing.

But not all “edge AI” is created equal.

Approaches differ on where and how to place AI on the edge. Some vendors run AI on their own MCUs; others embed it in wireless SoCs. Many AI startups want their AI processors designed into end systems.

The problem is that, with the emphasis on implementation, the results in power consumption, processing speed and AI-algorithm performance might disappoint system OEMs, cautioned Mouna El Khatib, CEO of AONDevices (AON), in a recent interview with the Ojo-Yoshida Report.

Application-specific AI processor

AON is a fabless chip company based in Irvine, Calif., specializing in application-specific edge-AI processors. AON describes its mission as “enabling edge AI for voice activation and sound recognition in battery-powered devices.”

Unlike many AI chip startups whose primary goal is to design a neural-network processor before exploring applications, El Khatib knew from the start the problems her processor had to solve. “I wanted to create a solution that guarantees any pattern recognition out of the box,” she said.

For AON, AI was not the goal, but the means to solve application-specific problems.

Prior to founding AON, El Khatib was a lead engineer working on voice, audio and hardware DSP SoCs at Conexant and Qualcomm, and she also had a brief stint at BrainChip. Her hands-on experience taught her the constraints, limitations and booby traps of AI at the edge.

Edge AI often triggers “a lot of finger-pointing among hardware, software and algorithm developers,” she said. It can be implemented on audio DSPs that require extensive hand-coded software, or on general-purpose CPUs that burn too much power. A hybrid approach with a neural-network accelerator can entail complicated tuning. Mismatches between processors and algorithms are not unusual: the hardware may not be powerful enough to run the algorithms, or third-party algorithms may be too big to fit on a generic processor.

The worst outcome is poor recognition performance. “If it doesn’t work all the time, or if it’s not accurate, people don’t trust edge AI,” said El Khatib.

Hence, she explained, AON has developed solutions that embed algorithms in an application-specific integrated circuit (ASIC). “We regard co-design of algorithms and hardware [as] critical” to offering high-performance voice and sound recognition at ultralow power, she noted.

AON’s product offering

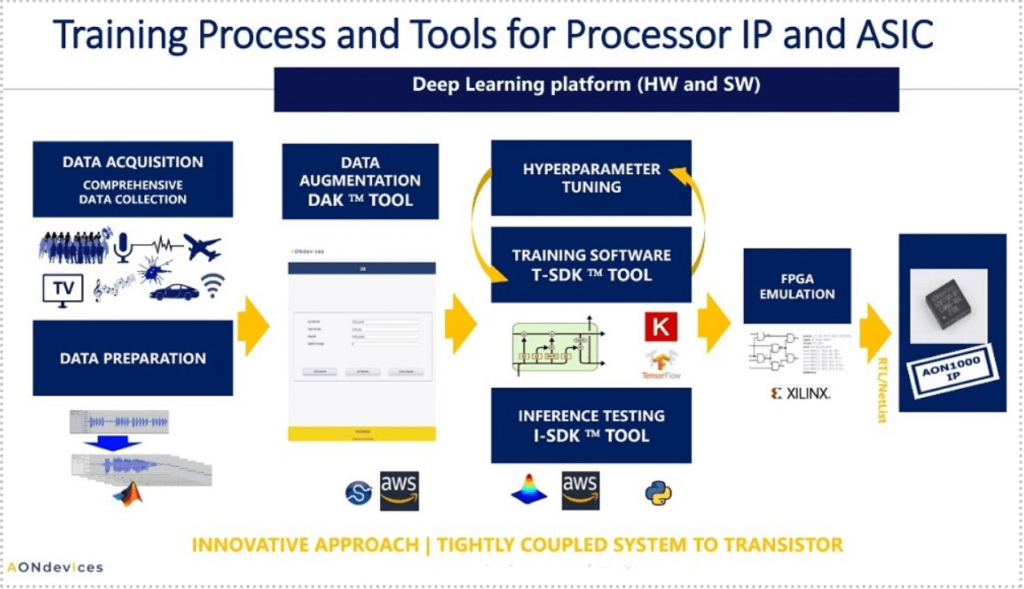

AON offers its edge-AI processor in two forms: as intellectual property (IP) and as a machine-learning chip. The neural-processing ASIC is tightly coupled with a deep-learning algorithm.

AON claims that its ultralow-power, multimodal chip can simultaneously handle multiple voice commands, sound events and sensor fusion. By adding another sensor, such as an accelerometer, AON’s multicore AI can perform gesture recognition, adding context to recognized sounds.

How AON’s software and hardware work in tandem (Source: AONDevices)

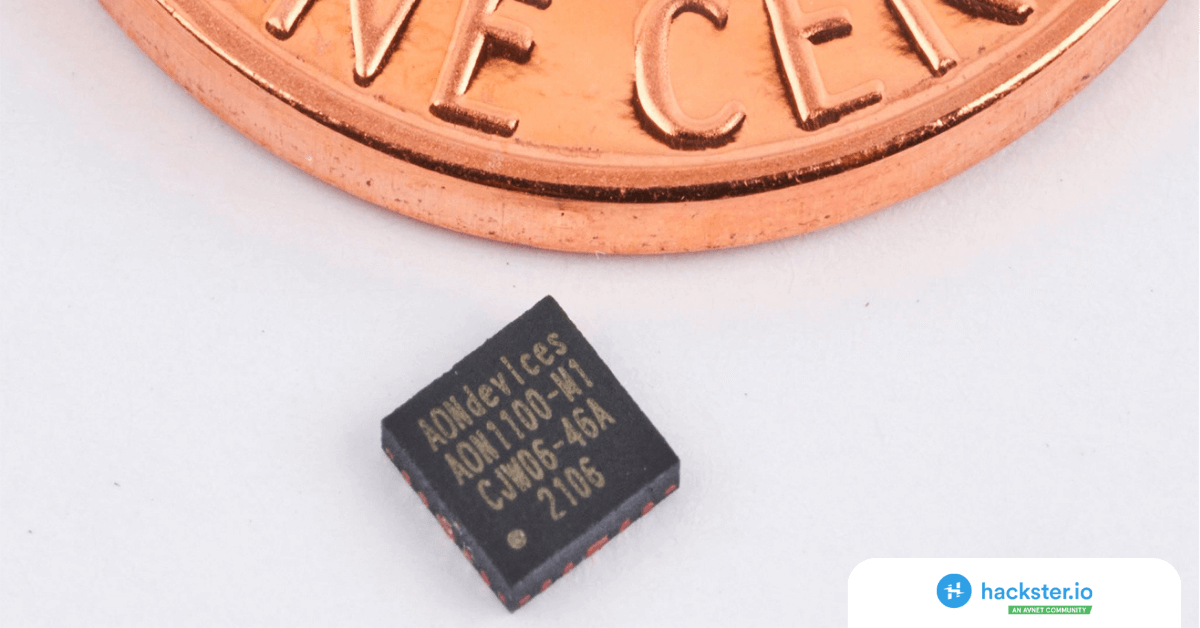

AON’s neural-processing ASIC, dubbed AON1100, achieves a “90 percent hit rate in 0 dB signal-to-noise ratio by using a single microphone,” claimed El Khatib, “instead of two microphones many companies rely on.”
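For readers unfamiliar with the unit, signal-to-noise ratio in decibels compares the power of the wanted signal with the power of the background noise. The standard definition, which says nothing about AON’s specific test conditions, shows that 0 dB means the voice is no stronger than the surrounding noise:

$$\mathrm{SNR}_{\mathrm{dB}} = 10\log_{10}\frac{P_{\text{signal}}}{P_{\text{noise}}}, \qquad \mathrm{SNR}_{\mathrm{dB}} = 0 \;\Longrightarrow\; P_{\text{signal}} = P_{\text{noise}}$$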

The chip, fabricated in a 40-nm process, consumes 260 µW. Asked about the cost of adding AON’s chip to products, the CEO said that, depending on volume, the AON1100 costs $1 to $2.
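As a rough scale check, assume (our assumption, not a statement from AON) that the chip draws its quoted 260 µW continuously while listening in always-on mode. Its energy use over one day would then be:

$$E = P\,t = 260\ \mu\text{W} \times 24\ \text{h} = 6.24\ \text{mWh} \approx 22.5\ \text{J}$$

Actual battery life in a product would also depend on the battery, the rest of the system and the duty cycle, none of which were specified.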

Under the radar

In an industry climate accustomed to measuring the worth and technology of AI chip startups by funds raised, AON has lingered under the radar.

As it turns out, AON, founded in 2018 with $2.6 million in seed funding, had no need to raise more money because it already had three paying, high-volume customers: two chip vendors and a large mobile OEM.

One customer is Dialog Semiconductor. According to AON’s website, Dialog is integrating AON’s AI into its advanced wireless communication ICs to “offer voice activity and hot-word detection at the edge for all applications.”

Asked about the unnamed large mobile OEM customer, El Khatib explained that it is licensing AON’s IP to integrate into its own SoC.

AON is currently raising Series A funding, aiming for $10 million to $15 million, noted the CEO.

AON’s management team consists of seasoned executives who previously worked at companies such as Conexant, Qualcomm, Intel and Broadcom. COO Ziad Mansour, for example, served as senior vice president of engineering, leading the digital hardware group in Qualcomm’s CDMA Technologies (QCT) division. El Khatib coaxed him out of retirement to join AON.

Evolution of speech/voice recognition

Fortune Business Insights predicts the speech/voice recognition market will grow from $6.9 billion in 2018 to $28.3 billion in 2026.
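Compound annual growth rate (CAGR) is the constant yearly rate $r$ that carries a start value $V_0$ to an end value $V_n$ over $n$ years. As a generic illustration (not a figure taken from the forecast), a 20 percent CAGR sustained for eight years multiplies a market roughly 4.3-fold:

$$r = \left(\frac{V_n}{V_0}\right)^{1/n} - 1, \qquad (1 + 0.20)^{8} \approx 4.30$$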

Behind this double-digit compound annual growth rate lies a host of innovations. Starting out with wake words such as “Hey Siri” and “OK, Google” on smart speakers and smartphones, voice-recognition technology has advanced to on-device sound recognition in earbuds, smartphones and IoT devices. Now, the technology can offer on-device voice commands, with or without a wake word, on remote controls or headsets.

The future, as AON sees it, is in devices that support “simultaneous multiple wake words, voice commands, sound recognition and sensor fusion.” Such devices include hearables, wearables, machines, robots, even vehicles.

Given the rapid progress in voice-recognition technology and its burgeoning uses, El Khatib laid out a few ground rules for a future in which edge-AI solutions become ubiquitous. “First, it must be always on, and it must work at ultralow power.”

Second, she said, “We need to enable voice and sound recognition without the use of noise suppression.” The real world is messy and noisy, and voice and sound recognition must withstand that reality. More important, sound recognition in earbuds and hearables should be able to alert the user to external hazards, like honking horns and crying babies, by automatically disabling noise cancellation and lowering the volume, trading music quality for safety.
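The hazard-alert behavior El Khatib describes boils down to simple event-driven control logic. The Python sketch here is purely illustrative: the event labels, the `EarbudState` type, the `on_sound_event` function and the ducked volume level are hypothetical names and values, not AON’s software or API.

```python
from dataclasses import dataclass

# Hypothetical sound-event labels treated as external hazards (illustrative only).
HAZARD_EVENTS = {"car_horn", "baby_crying", "smoke_alarm"}


@dataclass
class EarbudState:
    noise_cancellation: bool = True
    volume: float = 0.8  # 0.0 (mute) to 1.0 (max)


def on_sound_event(state: EarbudState, label: str, ducked_volume: float = 0.2) -> EarbudState:
    """Return the earbud state after a recognized sound event.

    For a hazard, switch noise cancellation off and cap the volume so the
    wearer can hear the surroundings; otherwise leave the state unchanged.
    """
    if label in HAZARD_EVENTS:
        return EarbudState(noise_cancellation=False, volume=min(state.volume, ducked_volume))
    return state


state = EarbudState()
state = on_sound_event(state, "dog_bark")
print(state)  # EarbudState(noise_cancellation=True, volume=0.8)
state = on_sound_event(state, "car_horn")
print(state)  # EarbudState(noise_cancellation=False, volume=0.2)
```

Using `min` means a hazard never raises the volume if the user already had it set below the ducked level.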

Competitive landscape

In addition to a host of new AI processor companies, many MCU suppliers are looking to enable AI on the edge.

A slew of SoC vendors are considering the integration of machine learning (ML) into edge devices, calling this trend “one of the highly anticipated developments in IoT.” Silicon Labs, for example, is offering wireless SoCs with a built-in AI/ML hardware accelerator.

Adding a hardware accelerator as a coprocessor to offload the MCU is a popular approach to enable AI/ML. But it comes with caveats.

El Khatib explained, “In general, these hardware accelerators still require a significant amount of processing in the MCU, causing higher power and higher latency.” The main issue, she said, is that this is a generic system requiring OEMs to find and embed their own algorithms. Chip companies ask OEMs to source tools from third parties, yet the OEMs remain responsible for the final system. “Basically, system companies who bought the pieces must make sure the solution that they put together works,” said El Khatib.

AON’s mission is to avoid complications by offering an entire solution: hardware, algorithm, tools and software designed to work together. “The customers just define the use case, provide the data to the tool set and quickly get the use case up and running,” El Khatib claimed.

Meanwhile, smartphone companies like Apple are running AI/ML software on their application processors. The iPhone, for example, can run sound recognition, detecting things like glass breaking, a smoke alarm or a baby crying. In a video demo comparing its own solution with an iPhone running iOS 15, AON showed that while the iPhone was detecting sounds, its user couldn’t use voice recognition (“Hey, Siri”). In the demo, the iPhone also suffered a delay in sound detection and recognition. But the biggest issue is power drain, El Khatib noted. “Running the software in the app processor wouldn’t be best for power.”

Bottom line:

AON has yet to disclose details of its processing architecture to anyone who hasn’t signed a nondisclosure agreement. But among AI startups, this one stands apart because it’s not competing on TOPS (tera operations per second) or chasing funding. With a clear focus on specific applications (voice and sound recognition at ultralow power), AON is taking the practical route to edge AI.

Junko Yoshida is the editor-in-chief of The Ojo-Yoshida Report.

She can be reached at junko@ojoyoshidareport.com